Digital Cities are changing the way we live, work and play. We’ve seen a recent focus on the idea of creating sustainable digital cities, which address how technology can be used to improve quality of life while reducing greenhouse gas emissions. But what is a digital city? In this article, you’ll learn all about the technology behind them and what they’re capable of.

What is a Digital City?

A digital city is a city that uses digital technologies to improve the quality of life of its citizens. The goal of a digital city is to use technology to make the city more efficient, sustainable, and livable. There are many different types of digital city initiatives, but they all share the same goal of using technology to make the city a better place to live.

Digital cities use a variety of different technologies to achieve their goals. Some of these technologies include:

-Sensors: Sensors are used to collect data about the city. This data can be used to monitor traffic, pollution, and other aspects of the city.

-Smart grids: Smart grids are used to manage the flow of electricity in the city. Smart grids can help reduce blackouts and brownouts, and can also help save energy.

-Smart buildings: Smart buildings use sensors and other technologies to automate heating, cooling, and lighting. This can save energy and improve the comfort of building occupants.

-Intelligent transportation systems: Intelligent transportation systems are used to manage traffic flow in the city. These systems can help reduce congestion and improve the efficiency of the city’s transportation network.

The Platform Approach to build a Digital City

Digital cities are built on a platform approach that enables different applications and services to be delivered through a shared infrastructure. The key components of a digital city platform include:

-An open data portal that provides access to city data and information

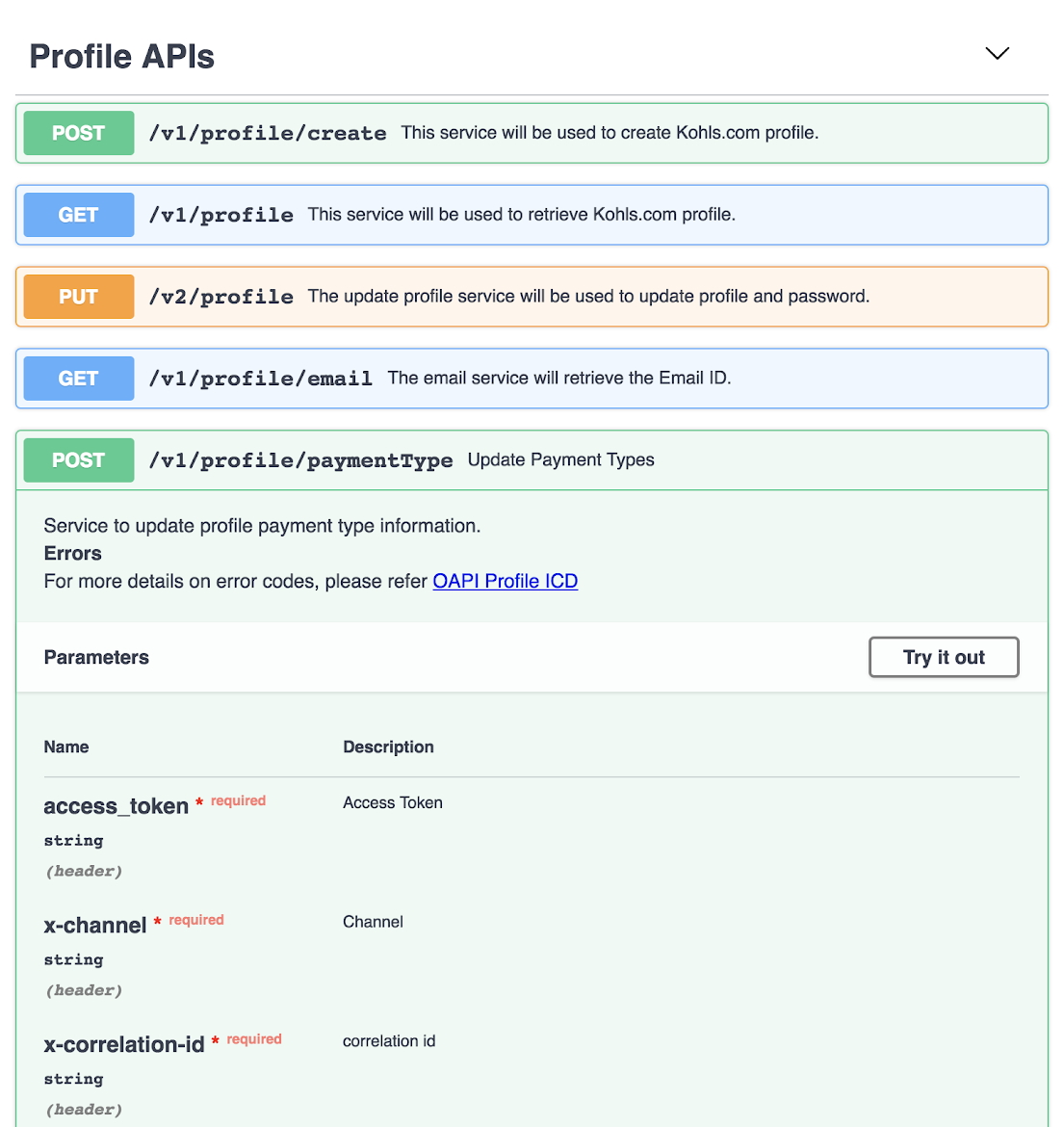

-A set of APIs that allow different applications to interoperate

-A cloud-based infrastructure that delivers scalability and flexibility

The advantages of this approach include lower costs, faster deployment of new services, and the ability to create an ecosystem of innovation around the city platform.

Pillars of Digital City

1. Connected Infrastructure:

A smart city is one with a digital infrastructure that allows for the easy flow of information and communication between city systems and its residents. This infrastructure must be secure and reliable in order to protect the data of both the city and its citizens.

2. Intelligent Transportation:

A key pillar of smart cities is intelligent transportation. This includes everything from real-time traffic monitoring to self-driving vehicles. By using data and technology to improve the efficiency of transportation, cities can reduce congestion, pollution, and accidents.

3. Sustainable Energy:

Smart cities use data and technology to make their energy usage more sustainable. This can include things like renewable energy sources, energy storage, and smart grids. By using sustainable energy, cities can reduce their carbon footprint and save money in the long run.

4. Resilient Buildings:

Smart buildings are those that are designed to be resilient to extreme weather events and other emergencies. They use things like sensors, big data, and AI to monitor conditions inside and outside the building. This information can then be used to make necessary adjustments to keep people safe and comfortable during an emergency.

5. Healthy Citizens:

A healthy citizenry is essential for any city to function

What is the Technological Components of a Digital City?

Digital cities are increasingly becoming a reality as more and more municipalities adopt the technology needed to create them. But what exactly is a digital city, and what are the technological components that make it up?

A digital city is an urban area that uses digital technologies to improve the livability, workability, and sustainability of the city. This can include everything from using sensors to monitor traffic and air quality, to using big data to make better decisions about city planning, to providing free public Wi-Fi.

The technological components of a digital city vary depending on the specific goals and needs of the municipality, but there are some common themes. These include:

–Sensors: Sensors are used to collect data about various aspects of the city, such as traffic patterns, air quality, and weather conditions. This data can be used to improve the efficiency of city operations and services.

-Big Data: Big data is a term used to describe the large amounts of data that are generated by sensors and other sources. This data can be used to identify trends and patterns, which can be used to make better decisions about city planning and service delivery.

-Crowdsensing: Crowdsensing is another simple way for cities to obtain data from their citizens. In this form of crowdsourcing, users voluntarily participate in a collaborative effort that helps make better use of the data.

-Open Data: Open data is a concept where governments release public data for use by their citizens, who can then create innovative applications and services based on that data. One of the most exciting aspects of open data is that it creates opportunities for citizen-to-citizen engagement and collaboration.-Internet of Things: The

-Public Wi-Fi: Public Wi-Fi is one of the simplest ways for a city to provide quick access to information and services for its citizens. Public Wi-Fi not only has social benefits, but can also be used by cities to , empower citizens with information.

–Digital Democracy: Digital democracy describes the implementation of digital technology by citizens to increase their participation in the democratic process. Digital democracy encompasses both online activism for political causes and the use of social media for political action.

Conclusion

Digital cities are becoming increasingly popular as more and more people move to urban areas. These cities use technology to improve the quality of life for residents and make the city more efficient. If you’re interested in learning more about digital cities and the technology behind them, this article has provided a good introduction.